In March 2025, a 24-minute anime called Twins Hinahima premiered on Japanese television. Thousands of anime episodes are produced every year, but Twins Hinahima stands out for one reason: the production team claims that “over 95% of animation cuts” were AI-assisted or AI-generated.

Of course, I had to investigate.

I tracked down a copy to see it for myself and researched how they integrated AI into the pipeline. Unsurprisingly, that 95% claim is more than a bit misleading.

So, today we’ll walk through the production process for Twins Hinahima, I’ll give you my impression as a director of animated TV myself, and then we’ll discuss what this might mean for future animation projects in Japan and beyond.

The Backstory

Twins Hinahima follows two young TikTok-style creators who dream of going viral and end up traveling to a strange alternate reality that might just grant their wish. This one-off anime was produced as a collaboration between established producer Frontier Works (Higurashi) and a new AI-centric studio called KaKa Technology Studio. The production appears to be a proof-of-concept experiment to show how KaKa’s production methods would play out under real-world conditions. The results are definitely a mixed bag.

First, let’s look at what’s not AI here. It’s actually a lot.

All of the scripting, storyboarding, character design, voice acting, sound production, and underlying animation were produced in a conventional way. That means AI was used in only two stages of the pipeline:

Background painting

Style transfer

So, while 95% of shots were touched by AI, the show is nowhere near 95% AI-made. To be fair, the production has gone out of its way to describe the technology as “supportive AI” and point out that nearly every shot involved human refinement in some way.
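To make the gap between those two numbers concrete, here is a rough back-of-the-envelope sketch in Python. It counts the pipeline stages listed above and compares that share with the shot-level claim. Weighting every stage equally is my own simplifying assumption, since the stages obviously differ in labor and cost, so treat the result as an illustration rather than a measurement.

```python
# Stages as listed in this article; equal weighting is an assumption for illustration only.
conventional_stages = [
    "scripting", "storyboarding", "character design",
    "voice acting", "sound production", "underlying animation",
]
ai_assisted_stages = ["background painting", "style transfer"]

total_stages = len(conventional_stages) + len(ai_assisted_stages)
stage_share = len(ai_assisted_stages) / total_stages

claimed_shot_share = 0.95  # "over 95% of animation cuts" per the production team

print(f"Shots touched by AI (claimed): {claimed_shot_share:.0%}")
print(f"Pipeline stages using AI:      {stage_share:.0%}")  # 25%
```

A shot counts as "touched" if either AI stage was applied to it, which is how a show can be 95% AI-assisted by one measure and mostly conventional by another.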

Let’s break it down.

Background Painting

It is not uncommon for photographs to be used as background reference in anime productions. In fact, an entire subculture (Seichi junrei) has anime fans seeking out real-world locations depicted in their favourite productions. But Twins Hinahima took this one step further by feeding photographs into Stable Diffusion and having the AI image generator transform the images into anime-style paintings.

During production, artists frequently had to touch up or adjust the AI-generated backgrounds. Producer Naomichi Iizuka noted at the Niigata International Animation Festival that the AI particularly struggled with rendering clouds, one of several instances requiring human intervention.

Style Transfer

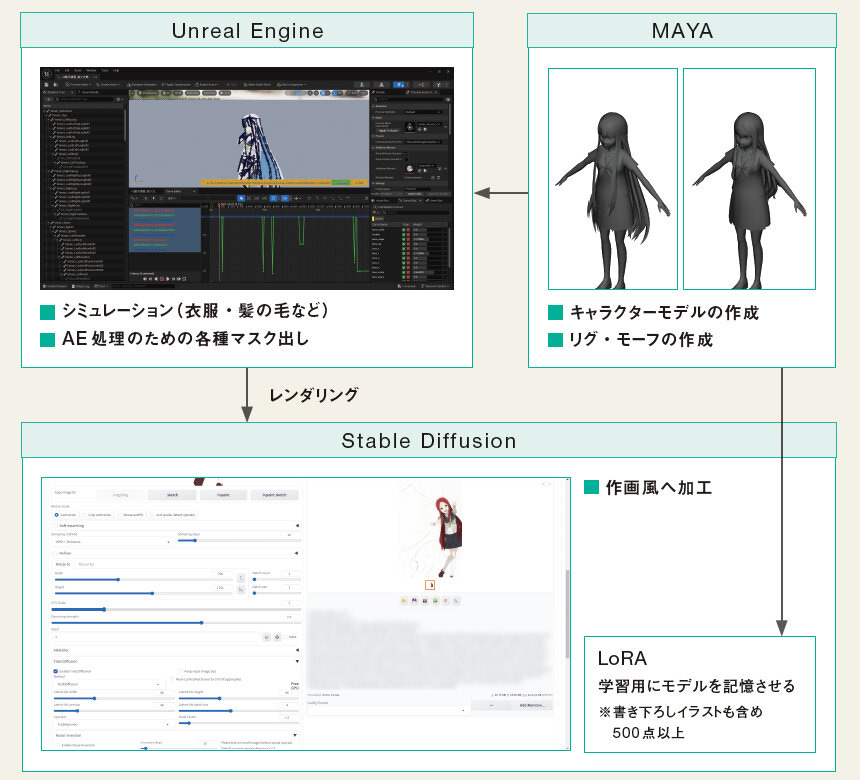

When it came to animation, the production opted to create conventional 3D rigs in Maya and then use motion capture or keyframed animation to create the movement in Unreal Engine. From there, animation frames were processed through Stable Diffusion, mapping the characters’ style onto the 3D animation in order to produce a classic 2D anime look.

To ensure a consistent look, the team trained a LoRA on traditional character designs by veteran character designer Takumi Yokota (Pokémon). A LoRA (low-rank adaptation) is a lightweight method of fine-tuning an AI image generator, in this case to match the style of a given artist. LoRAs are often touted as an “ethical” way to use AI image generation tools. However, while LoRAs solve the output problem with image generation, they are still built on top of existing models (in this case Stable Diffusion), which carry the “original sin” of scraping copyrighted material from the internet without permission.
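For the technically curious, here is a minimal NumPy sketch of the low-rank idea behind a LoRA. It is not the production's training code, and the layer size, rank, and scaling values are illustrative assumptions. The point it shows is why a LoRA cannot stand apart from its base model: the pretrained weight `W` stays frozen, and only two small matrices, `A` and `B`, are trained on top of it.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes only (assumptions, not the show's actual configuration).
d_out, d_in, rank, alpha = 768, 768, 8, 8

W = rng.normal(size=(d_out, d_in))              # frozen weight from the base model
A = rng.normal(scale=0.01, size=(rank, d_in))   # trainable low-rank factor
B = np.zeros((d_out, rank))                     # trainable, zero at start so output equals the base model


def lora_forward(x):
    """Base layer output plus the scaled low-rank correction."""
    return x @ W.T + (alpha / rank) * (x @ A.T) @ B.T


x = rng.normal(size=(1, d_in))
full_params = W.size
lora_params = A.size + B.size

print(f"Base layer parameters: {full_params}")
print(f"LoRA parameters:       {lora_params} ({lora_params / full_params:.1%} of the layer)")
```

Because `B` starts at zero, the adapted layer initially behaves exactly like the base model. Training on an artist's designs only nudges it from there, which is why the base model's training data remains part of the picture.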

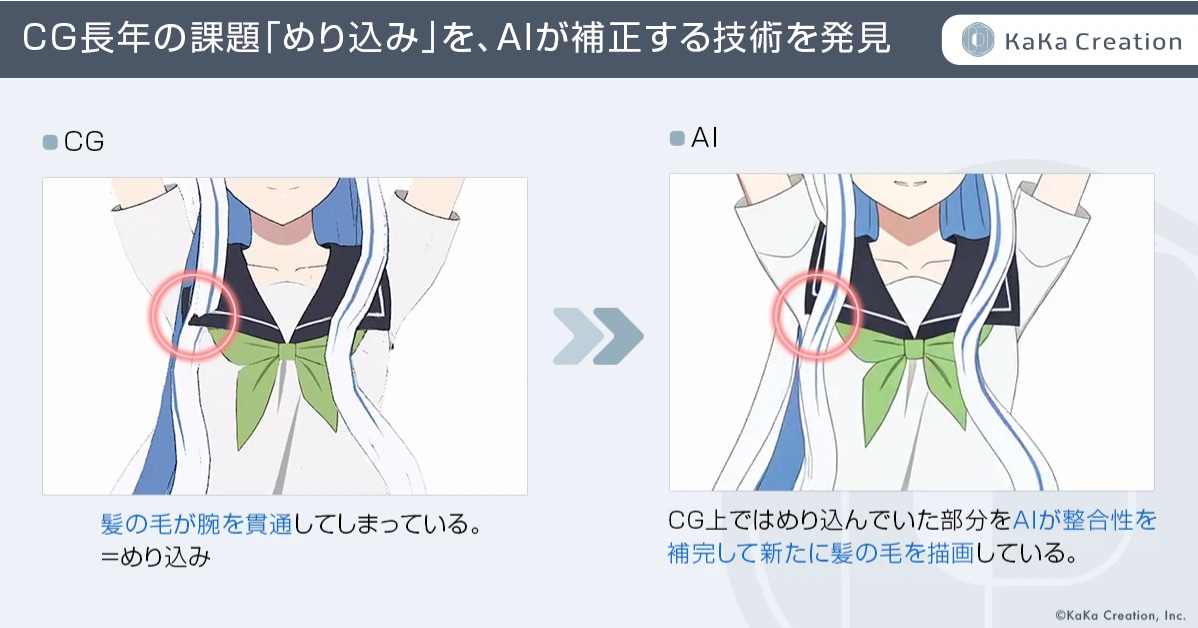

So why not just use a cel-shading render process like so many other anime productions? The producers claim that using AI for this step costs less than other methods and lets the AI pass fix geometry collisions and other abnormalities, so nobody has to adjust the underlying animation.

Simply put, the AI doesn’t animate anything; it functions more like a render engine. Human animators (or mocap data) still drive every movement, and Stable Diffusion just repaints the finished frames so they look hand-drawn.
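Here is a hedged sketch of what such a "repaint" pass could look like using the open-source Hugging Face `diffusers` library. I don't know which tooling the studio actually used, so everything here is an assumption: the function names, the folder layout, the prompt, and the `model_id` and `lora_path` arguments are all hypothetical. What it illustrates is the division of labor described above: the input frames supply all the motion, and the image-to-image `strength` setting controls how much of each frame gets repainted.

```python
from pathlib import Path


def effective_steps(num_inference_steps: int, strength: float) -> int:
    """Denoising steps an img2img pass actually runs; lower strength keeps more of the input frame."""
    return min(int(num_inference_steps * strength), num_inference_steps)


def repaint_frames(frame_dir, out_dir, model_id, lora_path, prompt,
                   strength=0.5, steps=30, seed=0):
    """Repaint every rendered PNG in frame_dir. All arguments are hypothetical placeholders."""
    # Heavy imports stay local so this file can be imported without a GPU stack installed.
    import torch
    from PIL import Image
    from diffusers import StableDiffusionImg2ImgPipeline

    pipe = StableDiffusionImg2ImgPipeline.from_pretrained(model_id, torch_dtype=torch.float16)
    pipe.load_lora_weights(lora_path)  # style LoRA, e.g. one trained on character sheets
    pipe = pipe.to("cuda")

    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for frame_path in sorted(Path(frame_dir).glob("*.png")):
        frame = Image.open(frame_path).convert("RGB")
        # Re-seeding per frame gives every frame the same starting noise, which may
        # reduce frame-to-frame flicker but is not expected to remove it.
        generator = torch.Generator("cuda").manual_seed(seed)
        styled = pipe(prompt=prompt, image=frame, strength=strength,
                      num_inference_steps=steps, generator=generator).images[0]
        styled.save(out / frame_path.name)


# With 30 steps at strength 0.5, only the last 15 denoising steps are applied to each frame.
print(effective_steps(30, 0.5))
```

Notice that nothing in the loop knows about the previous frame. Each image is repainted on its own, which is one plausible reason a pass like this can introduce shimmer between frames.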

My Take

First, a caveat: as of this writing, the producers haven’t released an English sub or dub of Twins Hinahima, so short of crash-coursing Duolingo, I can’t really discuss the story. But as I mentioned earlier, there was no AI used in the writing of the show. So, visuals only from me.

Overall, the final result feels generic. If you’re not looking too closely, you’d probably say, “Yep, that feels like an anime”, but there’s nothing memorable about it. The show doesn’t have the beautiful, closely observed moments of a Miyazaki feature, or the mind-bending visuals of something from Science SARU. It’s just kind of… fine.

When you look closer, you start to see the bigger issues. It’s true that the AI rendering pass can feel more organic than some cel-shading, but it often drifts too organic for the style of the show. There’s an awful lot of jitteriness in the inbetweens, especially in the hair or any time a camera move is involved. These are issues that would immediately get flagged during QC on any show I’ve directed. And given how AI has baked those issues into the frames, it’s not immediately clear how you would fix it.

Perhaps more than those technical issues, the use of Stable Diffusion creates a very inconsistent sense of art direction for the show. Given how odd AI-generated images can be, you might assume that when the story goes into the surreal realm the technology would shine. But it’s actually the reverse. The odd elements feel disjointed, with no overall cohesiveness, and the result ends up feeling like a sloppy pastiche. Wait, haven’t I seen a cat-bus before?

As I watched, I could easily spot the poses crafted by human hands, offering a welcome respite from the show's otherwise generic and inconsistent feel. I walked away feeling less impressed by the AI and more relieved whenever something genuinely human appeared on screen.

The Future of Anime

So, did the experiment fail? The producers claim that productions made with AI in this way could reduce the budget significantly, and in this industry money talks. But given the state of the AI tools at the time Twins Hinahima was made (late 2024), this method doesn’t seem ready for the mainstream. That doesn’t mean the tech won’t get there eventually; AI is progressing blindingly fast right now.

It’s important to note that the work of KaKa Technology Studio isn’t an outlier here. Many of the major anime studios, including Toei Animation (One Piece), have announced partnerships with tech firms to bring AI into their pipelines. Still, fan backlash to AI is real and producers are taking note.

Like the characters in Twins Hinahima, we're entering a strange new world, shaped by competing forces. Let’s hope we get more Miyazaki cat-bus and less AI slop-bus in the future.

Seeya next time,

Matt Ferg.
