When the first Toy Story movie came out, I was convinced it was going to be garbage. Up to that point, the only CGI I had seen was flying logos and geometric shapes rotating on endless grids. I could not imagine wanting to sit through 90 minutes of that.

Dear reader, I was wrong.

At that time, I couldn’t see beyond the current state of the technology to imagine what was possible. That’s sort of where I’m at with a new area of generative AI known as motion synthesis. The term refers to AI models that output editable 3D data rather than pixels. The result is conventional animation keys that can be adjusted inside existing CG software packages. It’s a totally different paradigm from text-to-video models like Google’s Veo, which spit out final frames that can’t be edited.

The results so far can seem a bit like glorified previz. But it feels like this tech could turn into much, much more. I’m fighting my pre-1995 Toy Story bias over here.

I have previously written about Cartwheel, a promising company that uses the same type of GenAI, and there are other startups in the space as well. But when Autodesk released this functionality inside Maya through a tool called MotionMaker, I took notice. It seems to me that having this technique native to a major animation software package could be a game changer if (and this is a big if) the results continue to expand and improve.

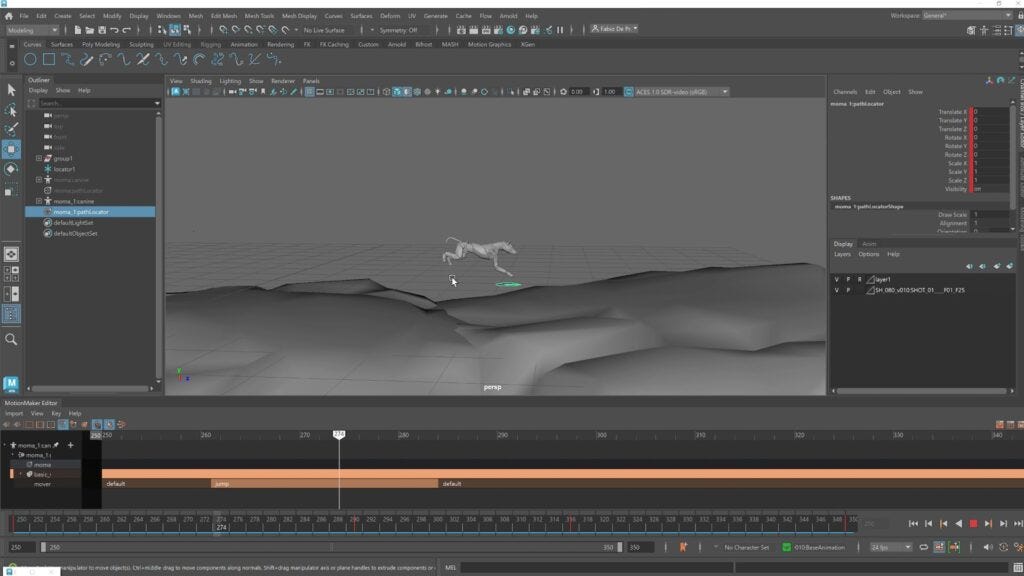

Last week I attended a webinar hosted by Autodesk where I was able to see a demo of MotionMaker and ask questions of its lead researcher, Evan Atherton. I thought I’d take some time to outline what the tool is, how it works, and why it might totally upend how we create animation and VFX. This post is specifically about MotionMaker, but a lot of the ideas here apply to the other motion synthesis tools out there.

“We can use AI in an artist-centric way to make animators’ jobs a little bit easier”

- Evan Atherton

What is MotionMaker?

MotionMaker was released in June 2025 as part of Maya 2026. The tool allows an animator to plot a path for a rig in Maya, and it will generate locomotion (either human or dog) along that path using machine learning. Additionally, moves from a limited library of other actions, such as jumps, can be added, and the character will transition into them in a natural way. Autodesk describes the process as providing high-level direction for a scene. You can think of it as guiding actors instead of keyframing every pose.

The important part is that after the AI generates the motion, you are left with a rigged character inside of Maya that can be manipulated.

How It Works

The technology behind MotionMaker is based on earlier research done by scientists at the University of Edinburgh in 2017 and 2018. In contrast to big AI models like Sora, motion synthesis needs very little data to train on. In fact, the MotionMaker model was trained on just 30 to 40 minutes of capture from the motion capture stage.

I’ve written before about how important ethical training is when it comes to AI. When I asked the Autodesk team to clarify what data they used to train their model, this was their response:

“Only on our motion capture data - we own the data but are making it freely available for anyone to use for any purpose. No other data was used to train. We wanted to make sure the training data was clean and not subject to IP issues.”

Once trained, the model (a collection of neural networks) can predict the next pose of the rig in much the same way that LLMs like ChatGPT predict the next word in a sentence. When it’s all put together, you end up with uniquely generated motion that moves the character where you need it in a natural way. This is not the same as using libraries of animated clips or walk cycles, because the tool has learned how to create new motion based on the shape of the path and the speed of the motion. The result is meant to feel more organic than traditional motion libraries.
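To make the next-pose idea concrete, here is a toy sketch of an autoregressive loop in Python. It is not MotionMaker's model: `toy_predictor` is a hypothetical hand-written stand-in for the trained networks, and the "pose" is reduced to a root position, a heading, and a gait phase. The turn rate and stride length are illustrative assumptions. What it does show is the structure described above: each generated frame is fed back in as the input for the next one, steered by the path the animator drew.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    # A drastically simplified "pose": a real rig has dozens of joint rotations.
    root_x: float
    root_z: float
    heading: float  # radians, 0 = facing +z
    phase: float    # position in the gait cycle, in [0, 1)


def toy_predictor(pose, target, speed, dt):
    """Hypothetical stand-in for a trained network: given the current pose and
    the next point on the path, return the next pose."""
    desired = math.atan2(target[0] - pose.root_x, target[1] - pose.root_z)
    # Wrap the heading error into [-pi, pi) and limit the turn rate (assumed 2 rad/s).
    error = (desired - pose.heading + math.pi) % (2 * math.pi) - math.pi
    max_turn = 2.0 * dt
    heading = pose.heading + max(-max_turn, min(max_turn, error))
    step = speed * dt
    return Pose(
        root_x=pose.root_x + step * math.sin(heading),
        root_z=pose.root_z + step * math.cos(heading),
        heading=heading,
        phase=(pose.phase + step / 1.4) % 1.0,  # assumes a 1.4 m gait cycle
    )


def rollout(start, path, speed, fps=24, reach=0.1, max_frames=2000):
    """Generate frames one at a time until every waypoint on the path is reached."""
    dt = 1.0 / fps
    poses = [start]
    waypoint = 0
    while waypoint < len(path) and len(poses) < max_frames:
        current = poses[-1]
        tx, tz = path[waypoint]
        if math.hypot(tx - current.root_x, tz - current.root_z) < reach:
            waypoint += 1  # close enough: steer toward the next point
            continue
        # The key step: the model's own output becomes its next input.
        poses.append(toy_predictor(current, path[waypoint], speed, dt))
    return poses


if __name__ == "__main__":
    frames = rollout(Pose(0.0, 0.0, 0.0, 0.0), [(0.0, 2.0), (1.0, 4.0)], speed=1.4)
    last = frames[-1]
    print(len(frames), round(last.root_x, 2), round(last.root_z, 2))
```

Because every frame depends only on the previous frame and the path, redrawing the path produces new motion rather than re-stitching stored clips. A real system would presumably predict full joint rotations and look further ahead along the path than a single waypoint.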

Features

Here are some of the key features of the tool available now:

RE-TARGET - Animation generated from MotionMaker can be re-targeted to your show-specific animation rig.

GENERATE LOCALLY - All animation is generated locally. No confidential IP gets sent to Autodesk’s server on a cloud somewhere.

SPEED RAMP - Actions can be sped up and slowed down in a natural way, meaning the character will realistically transition from a walk to a run rather than just having its keyframes compressed.

REDUCE FOOT SLIP - A procedural tool to reduce foot slip on the walks can be applied after generation. This is definitely just a reduction, not a perfect fix.
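Autodesk didn't detail how its foot-slip pass works, so the following is only a sketch of one common procedural approach (foot locking), not MotionMaker's implementation. When a foot is close to the ground and barely moving, it is treated as planted, and its horizontal position is pinned to where it first touched down. The function name and thresholds are illustrative assumptions, in scene units per frame.

```python
import math


def lock_feet(positions, height_thresh=0.05, slide_thresh=0.02):
    """Pin a foot in place while it is planted.

    positions: list of (x, y, z) foot positions per frame, with y up.
    Returns a new list with horizontal drift removed during ground contact.
    """
    corrected = []
    anchor = None  # (x, z) where the current contact began
    prev = None
    for x, y, z in positions:
        # Small per-frame horizontal movement counts as slip; large movement is a real step.
        slide = 0.0 if prev is None else math.hypot(x - prev[0], z - prev[2])
        planted = y < height_thresh and slide < slide_thresh
        if planted:
            if anchor is None:
                anchor = (x, z)  # first frame of this contact
            corrected.append((anchor[0], y, anchor[1]))
        else:
            anchor = None  # foot lifted or stepping: release the lock
            corrected.append((x, y, z))
        prev = (x, y, z)
    return corrected
```

For example, a planted foot drifting 1 cm per frame, `[(0.0, 0.0, 0.0), (0.01, 0.0, 0.0), (0.02, 0.0, 0.0), (0.5, 0.2, 0.0)]`, comes back with its first three frames pinned at the origin and the lifted fourth frame untouched. Hard pinning like this can cause a visible pop when the lock releases, and the leg still needs an IK solve to actually reach the pinned foot, so production tools typically blend in and out of the lock.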

Limitations

And the very real limitations of the software as of today:

CHARACTERS - Out of the gate, this tool only works with 2 human-type movements and dog locomotion. Aside from walking and running, there are limited additional movements.

STYLE - The dataset is entirely made up of mo-cap done by Autodesk, so you are stuck with actions that have a very realistic, motion capture feel. No cartoony animation yet.

TERRAIN - The tool works on totally flat ground planes and slight inclines, but not on rocky or uneven terrain.

START POSES - The tool works best if your start pose is close to the locomotion action (a standing pose leading into a run, for example), but when the pose is quite different, the tool has a hard time interpolating into the generated animation.

The Future

MotionMaker’s current output may feel limited, but Autodesk stressed in the webinar that they see this as the beginning. Their future plans include:

Letting artists train the model on their own mocap or key-framed data. This is a big one!

Extending the tech to full facial rigs.

Generating motion between bespoke key poses.

Adding a wider palette of locomotion to their dataset, including flight (I’m not sure who’s going to be attaching mo-cap trackers to the budgies).

How It Could Fit Into a Production Workflow

This tech definitely wouldn’t be a good fit for every shot, or every production. But looking ahead to the near-term future, this is how I could see MotionMaker (or any other motion synthesis tool) used in production after just a bit more development.

Imagine a second season of a mid-tier CG animated series (greenlighting anything might feel like fantasy at this moment, but stick with me here). There are already 13 half-hours of your characters beautifully moving in their distinct style, lovingly animated by the first season crew. The tool could train on that first season dataset to learn how to animate each character in the style unique to your show, across a variety of different actions.

When an animator is given a shot in season two, they might start by blocking it out more like live action. They would indicate a starting mark and chart a path. Maybe they would direct one character to walk over to a chair and take a seat while another character barges through a door and falls on their face. Very quickly the tool would produce a base layer of animation that covers the basic locomotion for the scene, all animated in the show-approved style.

Would the shot be finished? Far from it, but now the animator would be spending all of their time on performance and nuance, not on locomotion.

After even more development, maybe the AI could read and interpret a storyboard panel to give suggestions for camera and asset placement. An automated lipsync pass doesn’t feel that far off either. All of these things would remain in the animator’s control because we’re dealing with CG assets, not final video frames.

That’s not to say it’s going to be some utopian animated future. An optimist might argue that this tech could allow smaller-budget shows to be more ambitious in scale and scope, but the reality could just as easily be massive layoffs for animators.

We might still be in the flying logo stage of this technology today, but we should all pay close attention because rotating shapes can become Toy Story before we know it. And the more we understand the emerging technology, the more we can find a way to be part of the future.

Have you had the opportunity to try any of these motion synthesis tools? I would love to hear your first-hand impressions.

Seeya next time,

Matt Ferg.

p.s. For a more in-depth look at the science behind motion synthesis, you can check out Evan Atherton’s talk from SIGGRAPH 2024.