With so much AI news swirling around the entertainment industry, it’s hard to separate what’s really happening from the smoke and mirrors pushed by the hype merchants. So, over the next few weeks, I’ll be releasing a three-part “State of the Art” series that looks at the verified, documented ways AI is actually being used in production today.

My focus will be on where AI has made its way into mainstream studios and shows, not my personal guesses, not speculation about what might happen someday, and not the experimental indie projects that are pushing boundaries on a smaller scale. My goal is to give an honest, level-headed view of how the technology is working inside real pipelines for real professionals.

Here’s the plan:

PART 1: Animated film & television

PART 2: VFX

PART 3: Gaming

I hope these posts provide a baseline that we can revisit in a few months to track how AI’s role expands (or contracts).

As of September 2025, here’s where AI has a confirmed role in professional animation production:

Writing

Protections won by the Writers Guild of America (and other unions) have meant that there is very little documented and credited use of AI in animation writing as of today. The one famous exception is South Park, which satirized the use of AI in its 2023 episode “Deep Learning”, credited as “Written by Trey Parker & ChatGPT”.

Voice Acting

Union protections have also kept AI out of North American recording booths for now.

However, tools like Toon Boom’s Ember AI have enabled more teams to create temporary AI scratch dialogue for animatics. While this placeholder VO is eventually replaced by a human actor, it can cause friction in production, as UK voice actor Emma Clarke recently pointed out:

I’ve seen it happen too often: I join a session, record the final VO to brief, following the client's thoughtful direction…aaaand it "doesn’t fit." Why? Because the visuals were locked to a robotic placeholder, not a real human voice.

Design

Design is one area where AI has begun to take root, though its use is often kept under wraps.

Reports suggest tools like Midjourney are being used during the concept stage, even if studios don’t always disclose it. While the Animation Guild contract requires producers to notify crews when new generative tools are introduced, some productions have skirted this rule, as reported by Lila Shapiro of New York Magazine.

One animator who asked to remain anonymous described a costume designer generating concept images with AI, then hiring an illustrator to redraw them — cleaning the fingerprints, so to speak. “They’ll functionally launder the AI-generated content through an artist,” the animator said.

Veteran character designer Carlos Grangel (Corpse Bride, Hotel Transylvania) has noted that he now competes with designers using AI on major productions.

Not all use of AI in design has been so hidden from view. Early in the GenAI debate, Marvel stepped into controversy when it used AI-generated images for the animated opening titles of its Disney+ series Secret Invasion. In Japan, some studios have openly discussed using AI to transform photographs into anime-style background art. Examples include Twins Hina Hima and Netflix’s short The Dog & The Boy.

AI-powered inpainting and outpainting are also gaining traction, especially with “ethical” tools such as Adobe Firefly or the image generators integrated into Toon Boom’s Ember AI. These techniques allow artists to extend a background or remove elements without having to repaint everything by hand.

The UK animation studio Blue Zoo has been transparent about using AI in this way. During an interview with the Kids Media Club podcast, co-founder Tom Box explained how the studio came around to allowing AI for image generation after initially deciding it would never find its way into production art:

Some of our artists were using tools like Adobe Firefly’s Photoshop generative fill and they said, “Do I seriously have to spend six hours doing this tedious task when I can actually just press one button to do it? Why?” Okay, well then if the tool is using legitimate training data and that has been legally okayed, then we’ll let the artists choose whether they want to speed up their workflows in that sense. And we found that was the fairest way we could think of not feeling like we’re forcing artists to use it, but they can make educated choice on whether they feel like it will help their workflow.

Modeling

In CG animation, AI-assisted modeling tools are improving quickly, but very few mainstream studios have gone on record about their use. One exception is Myth Studio, which has used the Kaedim system for 3D asset generation on a number of advertising projects.

AI-generated 3D assets are much more prevalent in game production. Stay tuned for more on that in part three of this series!

Animation

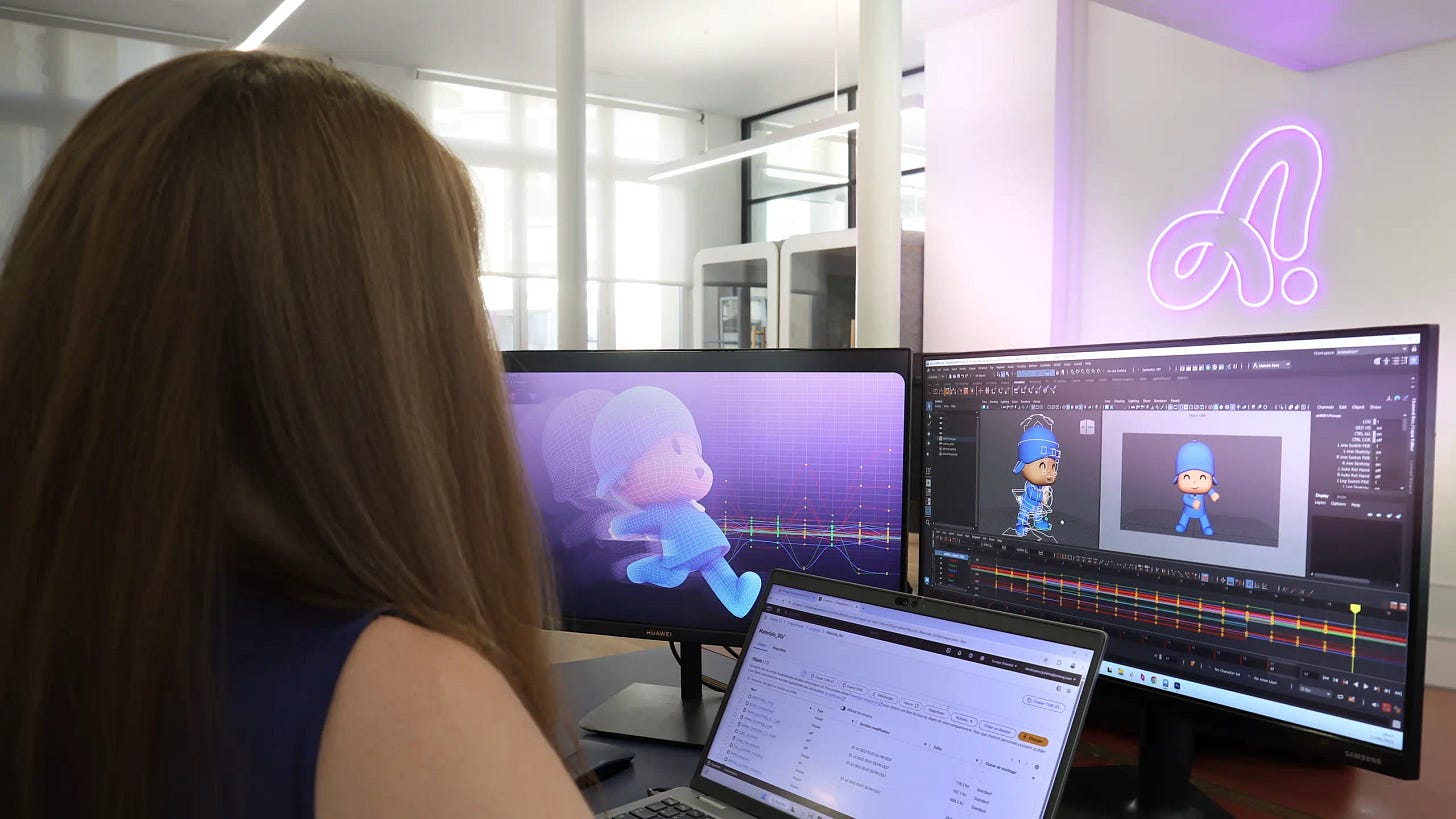

French studio Animaj has emerged as one of the leaders in applying AI to the animation stage of the process. On the current season of Pocoyo, its proprietary Sketch-to-Pose and inbetweening tools use AI to translate storyboard sketches into character poses in Maya. Motion is then applied to those rigs by a system trained on the show’s distinct style from earlier seasons.

In Korea, CJ-ENM has said it used a system called Cinematic AI on its series Cat Biggie. While details are scarce, the company’s description suggests a motion-synthesis approach similar to tools like Cascadeur or Cartwheel, generating movement data for 3D rigs. Baek Hyun-jung, head of AI business and production, explained the process this way:

The key challenge was controlling and expressing the dynamic movements unique to animation. We used our tool, Cinematic AI, to convert the characters into 3D data and train the production system accordingly. This allowed us to achieve a high level of completeness in the final output.

In the anime space, Sony has been moving forward with AI tools that automate lip sync by analyzing audio tracks. The company says this tech has been deployed on four anime productions to date, the most recent being this year’s Utano☆Princesama TABOO NIGHT XXXX.

Ink & Paint

Sony has also integrated AI into its new AnimeCanvas tool. According to its 2025 corporate report, the AI-assisted ink & paint workflow reduces the number of clicks by roughly 15%. That might not seem like much, but it could make a big impact when multiplied across the thousands of anime episodes produced each year in Japan.

Compositing

AI-driven style transfer (applying a new look to completed animation) is beginning to show up in more productions. The anime Twins Hina Hima experimented with the approach, with uneven results. Pixar, on the other hand, used AI-powered style transfer extensively in Elemental. The team trained an AI system on hand-painted images to create painterly textures applied to 3D geometry in nearly every shot.

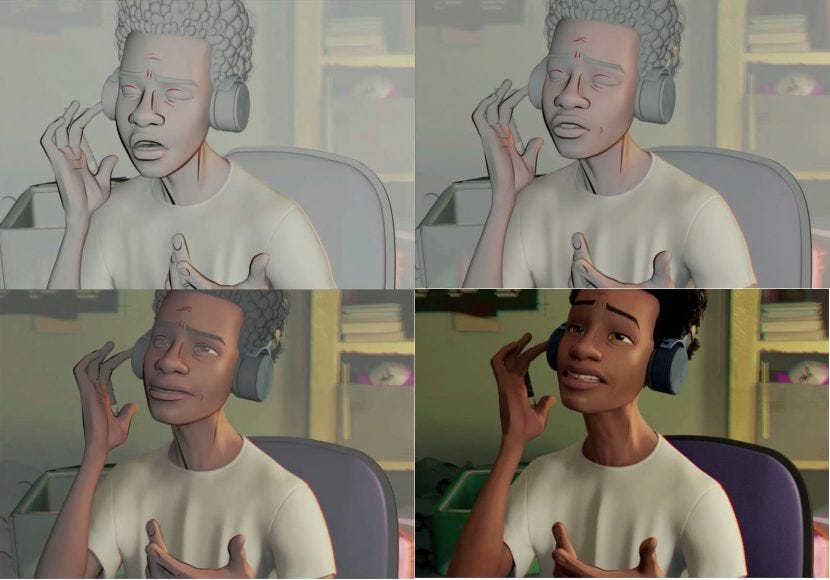

Sony Pictures Imageworks also developed a machine learning tool for the Spider-Verse films that predicts the lines an artist might draw on each frame. Artists can then accept, modify, or replace those lines. The tool sits in a grey zone between generative AI and predictive AI. It’s generative in the sense that it generates artwork that will be seen on screen, but predictive in the sense that, like auto-correct, it is making predictions that artists can either accept or reject.

Either way, these are the sorts of bespoke AI tools that keep artists in control and will likely show up more often in productions.

Rendering

Pixar’s Pete Docter has been public about the studio’s use of AI in the rendering stage of its films. Machine learning is used in denoising, which allows frames to be rendered much faster. It’s not the sexiest use of AI, but it makes a big difference in production and has become standard in feature animation.

Music

While there are reports of AI-generated music appearing in some live-action scores, I wasn’t able to find any animated series or films that have publicly confirmed using AI in their soundtracks. The closest example comes from coverage suggesting that K-pop producer Vince experimented with ChatGPT for inspiration on the song Soda Pop from KPop Demon Hunters. It’s important to note the extent of that use remains unclear and hasn’t been officially credited.

Conclusion

As of September 2025, AI’s role in animated TV and film remains fairly limited. The uses tend to be narrow and targeted, and they supplement the work of artists rather than completely automating the process.

One notable absence from this list is video generation tools like Sora, Veo, or Runway. Studios seem reluctant to adopt these tools because of the thorny legal issues regarding training data and ownership. And as I’ve found in my own tests, they lack control and consistency, making them hard to fit into a production pipeline that depends on feedback and iteration.

We’ll check back in a few months to see how everything is changing.

In the meantime, check back next week for part two of the State of the Art series where we’ll look at AI in VFX.

Seeya next time,

Matt Ferg.

Hey there! About Animaj, I am really disappointed in their direction with Pocoyo. While I understand their marketing towards preschoolers, this isn't Pocoyo. The way he used to be amazed by even simple objects, his gestures and sounds, the way the narrator was present and active helping him, etc. were all pure toddler behaviour. Preschooler Pocoyo just feels wrong to me. That original toddler charm is what made Pocoyo so special to me from the beginning and even helped me through depression. That was the 1-year-old that I lived for, and I am absolutely disappointed and mad at them for aging him up. I really hope they bring the original one back... He was just... so special and unique. There are not a lot of shows like this, while there are a dozen preschool shows that season 5 reminds me of, and I can name them right now. Pocoyo has been an influence on my life for as long as I can remember, and I want him as he was: babyish, silly, curious, etc. Now I feel lost.

Animaj, please bring toddler Pocoyo back. Even an official statement that he is still 1 year old would mean the world to me.

Thanks for reading... feel free to reply to me, I just wanna be heard.